Demo of Speech-to-singing Conversion

| Method | Sample1 | Sample2 | Sample3 | Sample4 | Sample5 |
| --- | --- | --- | --- | --- | --- |
| User's Speech | | | | | |
| Zero-effort Transfer | | | | | |
| Speaker-dependent Spectral Mapping | | | | | |
| Speaker-independent Spectral Mapping | | | | | |
| Original Singing | | | | | |


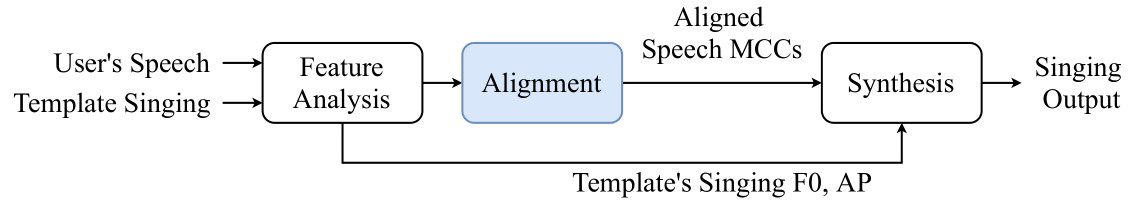

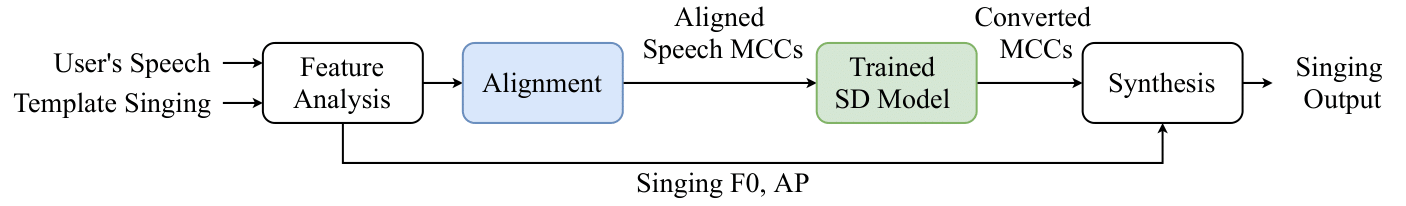

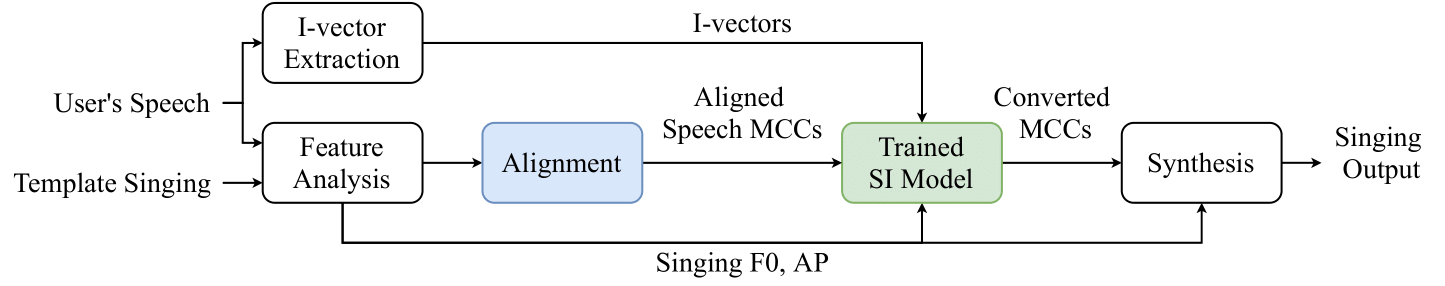

We carry out another experiment on the NHSS database for speech-to-singing conversion, which serves as a reference system for readers. In speech-to-singing conversion, we convert a user's read speech (user's speech) into his/her singing with the same lyrical content. The basic idea is to transform the prosody and spectral features of the user's speech to those of the reference singing while preserving the speaker identity of the user. In this work, we focus on template-based speech-to-singing conversion, where we keep the prosody the same as that of the reference singing. We perform neural-network-based spectral mapping to convert the spectral features of speech to those of the singing voice.

We compare three speech-to-singing conversion approaches: zero-effort transfer, speaker-dependent spectral mapping, and speaker-independent spectral mapping. In all three methods, we use the manually annotated word boundaries and tandem features with dynamic time warping (DTW) to obtain the alignment between the frames of speech and singing.
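The frame-level alignment step can be illustrated with a minimal sketch of textbook DTW. This is not the code used in the experiments: the function names (`frame_distance`, `dtw_path`, `warp_to_singing`), the Euclidean frame distance, and the one-dimensional toy features are all assumptions made for illustration. In the setup described above, the inputs would be tandem features, and the alignment would presumably be computed within each pair of manually annotated word boundaries.

```python
import math


def frame_distance(a, b):
    """Euclidean distance between two feature vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def dtw_path(speech, singing):
    """Align two frame sequences with classic DTW.

    Returns (total_cost, path), where path is a list of
    (speech_index, singing_index) pairs from the first to the last frames.
    """
    n, m = len(speech), len(singing)
    inf = float("inf")
    # cost[i][j]: best accumulated cost aligning speech[:i] with singing[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = frame_distance(speech[i - 1], singing[j - 1])
            cost[i][j] = d + min(cost[i - 1][j - 1], cost[i - 1][j], cost[i][j - 1])

    # Backtrack from the last cell to recover the warping path.
    i, j = n, m
    path = []
    while i > 0 and j > 0:
        path.append((i - 1, j - 1))
        candidates = [
            (cost[i - 1][j - 1], i - 1, j - 1),  # diagonal step
            (cost[i - 1][j], i - 1, j),          # advance speech only
            (cost[i][j - 1], i, j - 1),          # advance singing only
        ]
        _, i, j = min(candidates)
    path.reverse()
    return cost[n][m], path


def warp_to_singing(speech, path, num_singing_frames):
    """Pick one aligned speech frame for every singing frame (hypothetical helper)."""
    warped = [None] * num_singing_frames
    for speech_idx, singing_idx in path:
        warped[singing_idx] = speech[speech_idx]
    return warped


# Toy example: the singing holds each "sound" twice as long as the speech.
speech = [[0.0], [1.0], [2.0]]
singing = [[0.0], [0.0], [1.0], [1.0], [2.0], [2.0]]

total_cost, path = dtw_path(speech, singing)
warped_speech = warp_to_singing(speech, path, len(singing))
print(total_cost)
print(path)
print(warped_speech)
```

After warping, the speech features have one frame per singing frame, so they can be paired frame by frame with the singing features, for example to form training pairs for a spectral mapping model.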

Please cite the following paper if you use this database:

Bidisha Sharma, Xiaoxue Gao, Karthika Vijayan, Xiaohai Tian, and Haizhou Li. "NHSS: A Speech and Singing Parallel Database." arXiv preprint arXiv:2012.00337 (2020). https://arxiv.org/abs/2012.00337

@article{sharma2020nhss,
  title={NHSS: A Speech and Singing Parallel Database},
  author={Sharma, Bidisha and Gao, Xiaoxue and Vijayan, Karthika and Tian, Xiaohai and Li, Haizhou},
  journal={arXiv preprint arXiv:2012.00337},
  year={2020}
}